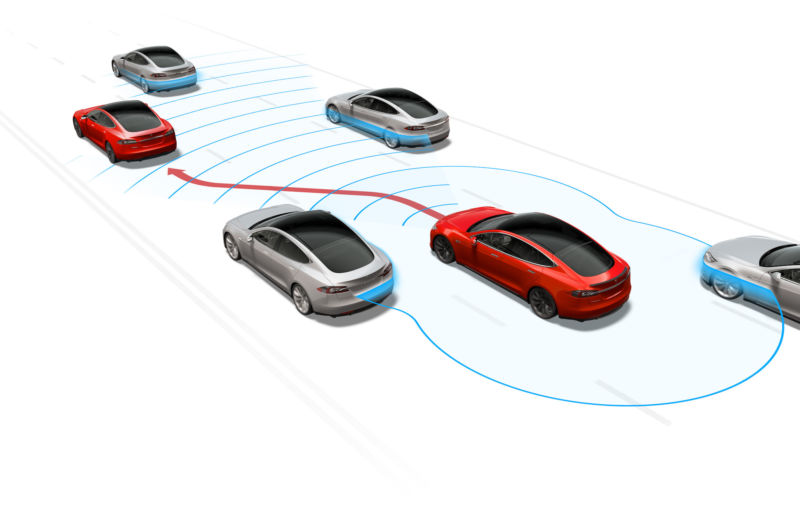

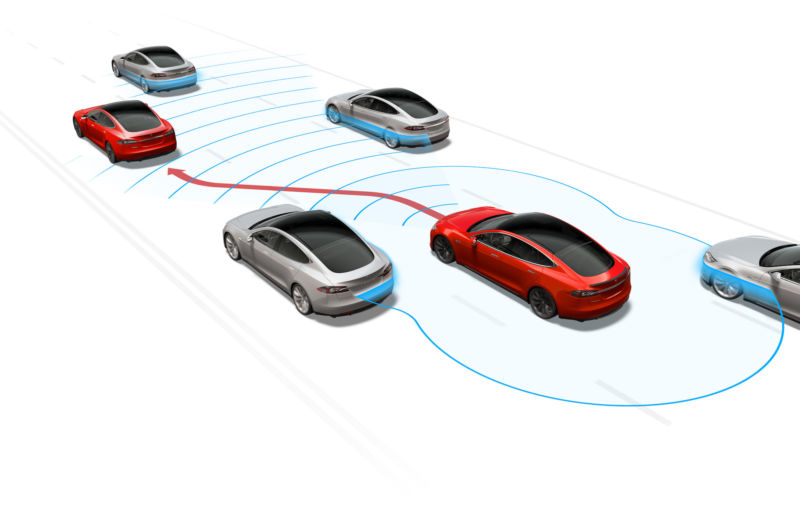

Tesla Autopilot is currently under investigation by the National Highway Traffic Safety Administration for 12 crashes and one death. Now the company is expanding access to an experimental version of the software. (credit: Tesla)

Over the weekend, Tesla expanded access to the latest version of the company’s highly controversial $10,000 automated driving feature. As is the Tesla way, CEO Elon Musk took to Twitter to announce the news, saying that owners could begin requesting access to the beta on Saturday. However, Musk noted that “FSD 10.1 needs another 24 hours of testing, so out tomorrow night.”

For now, access to the latest build of the software is by no means assured. Instead, drivers have to agree to have their driving monitored by Tesla for seven days. If they’re deemed safe drivers, they can have access to the experimental software. By contrast, autonomous vehicle companies like Argo AI put their test drivers through extensive training to ensure they’re able to safely supervise experimental autonomous driving systems while they are being tested on public roads, which is an entirely different task from that of safely driving a car manually.

Better not brake

Tesla says that five factors affect whether you’re a safe enough driver to then perform the task of supervising an unfinished automated driving system that is currently under investigation by the National Highway Traffic Safety Administration for a dozen crashes into parked emergency vehicles, including one fatality.