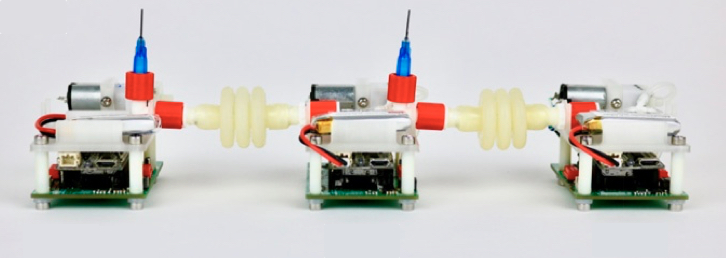

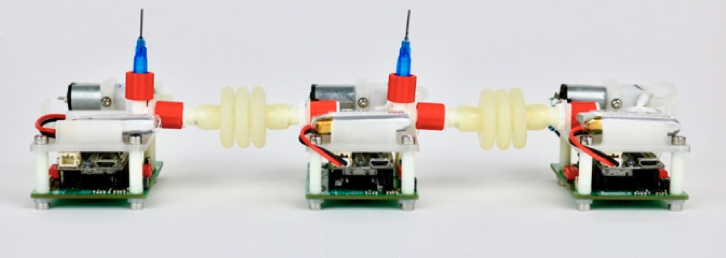

The robotic train. (credit: Oliveri et al.)

One of the most impressive developments in recent years has been the production of AI systems that can teach themselves to master the rules of a larger system. Notable successes have included experiments with chess and StarCraft. Given that self-teaching capability, it’s tempting to think that computer-controlled systems should be able to teach themselves everything they need to know to operate. Obviously, for a complex system like a self-driving car, we’re not there yet. But it should be much easier with a simpler system, right?

Maybe not. A group of researchers in Amsterdam attempted to take a very simple mobile robot and create a system that would optimize its movement through a learn-by-doing process. While the system the researchers developed was flexible and could be effective, it ran into trouble due to some basic features of the real world, like friction.

Roving robots

The robots in the study were incredibly simple and were formed from a varying number of identical units. Each unit had an on-board controller, battery, and motion sensor. A pump controlled a piece of inflatable tubing that connected a unit to a neighboring unit. When inflated, the tubing generated a force that pushed the two units apart. When deflated, the tubing would pull the units back together.