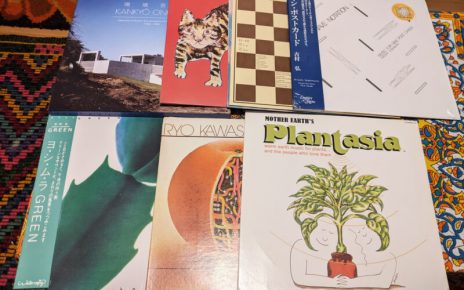

(credit: Lewis Ogden / Flickr)

We don’t need a study to know that misinformation is rampant on social media; a search for “vaccines” or “climate change” is enough to confirm that. A more compelling question is why. It’s clear that, at a minimum, there are contributions from organized disinformation campaigns, ardent political partisans, and questionable algorithms. But beyond those, there are still a lot of people who choose to share stuff that even a cursory examination would show is garbage. What’s driving them?

That was the question that motivated a small international team of researchers, who decided to take a look at how a group of US residents chose which news to share. Their results suggest that some of the standard factors that people point to when explaining the tsunami of misinformation (inability to evaluate information and partisan biases) aren’t having as much influence as most of us think. Instead, a lot of the blame gets directed at people just not paying careful attention.

You shared that?

The researchers ran a number of fairly similar experiments to get at the details of misinformation sharing. This involved panels of US-based participants recruited either through Mechanical Turk or via a survey population that provided a more representative sample of the US. Each panel had several hundred to over 1,000 individuals, and the results were consistent across different experiments, so there was a degree of reproducibility to the data.