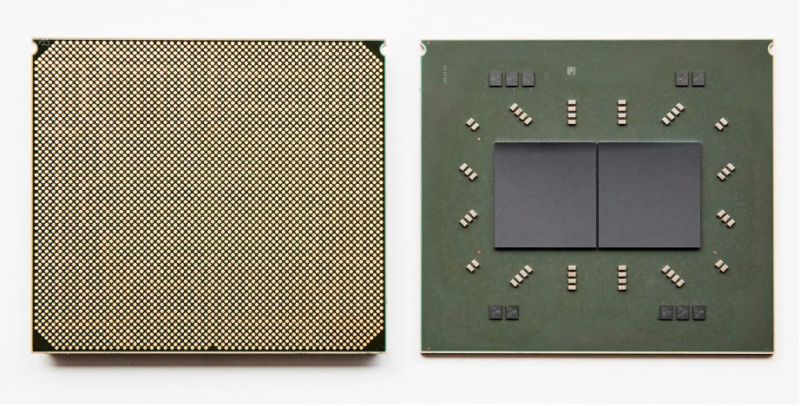

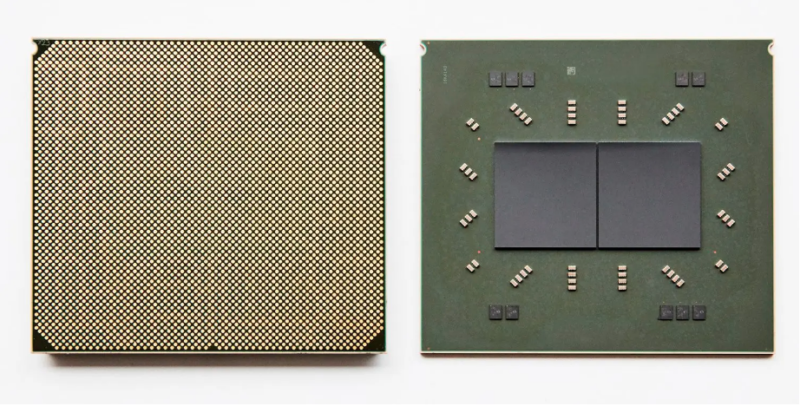

Each Telum package consists of two 7nm, eight-core / sixteen-thread processors running at a base clock speed above 5GHz. A typical system will have sixteen of these chips in total, arranged in four-socket “drawers.” (credit: IBM)

From the perspective of a traditional x86 computing enthusiast (or professional), mainframes are strange, archaic beasts. They’re physically enormous, power-hungry, and expensive compared with conventional data-center gear, generally offering less compute per rack at a higher cost.

This raises the question, “Why keep using mainframes, then?” Once you wave away the cynical answers that boil down to “because that’s how we’ve always done it,” the practical answers largely come down to reliability and consistency. As AnandTech’s Ian Cutress points out in a speculative piece focused on the Telum’s redesigned cache, “downtime of these [IBM Z] systems is measured in milliseconds per year.” (If true, that’s at least seven nines of availability.)
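The “seven nines” figure is easy to sanity-check with a little arithmetic. The sketch below is illustrative only: it assumes “milliseconds per year” means anywhere from 1ms to just under one second of downtime, it uses a 365.25-day year, and the function name `availability_nines` is made up for this example.

```python
import math

# Assumption: an average year of 365.25 days (about 31.56 million seconds).
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def availability_nines(downtime_seconds_per_year: float) -> int:
    """Count the whole 'nines' of availability for a given yearly downtime.

    Hypothetical helper for illustration; not taken from IBM or AnandTech.
    """
    if downtime_seconds_per_year <= 0:
        raise ValueError("downtime must be positive to count nines")
    # Fraction of the year the system is unavailable.
    unavailability = downtime_seconds_per_year / SECONDS_PER_YEAR
    # An unavailability of 1e-7 corresponds to 99.99999% uptime (seven nines),
    # so the whole-number part of -log10(unavailability) is the nines count.
    return math.floor(-math.log10(unavailability))


if __name__ == "__main__":
    # Just under one second of downtime per year: the worst "milliseconds" case.
    print(availability_nines(0.999))
    # A single millisecond of downtime per year: the best case.
    print(availability_nines(0.001))
    # For contrast, one hour of downtime per year.
    print(availability_nines(3600))
```

Under those assumptions, even the worst case of 999ms per year comes out to seven nines, and anything shorter only adds more, which is consistent with “at least seven nines.” An hour of downtime per year, by contrast, works out to just three.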

IBM’s own announcement of the Telum hints at just how different the priorities of mainframe and commodity computing are. It casually describes Telum’s memory interface as “capable of tolerating complete channel or DIMM failures, and designed to transparently recover data without impact to response time.”