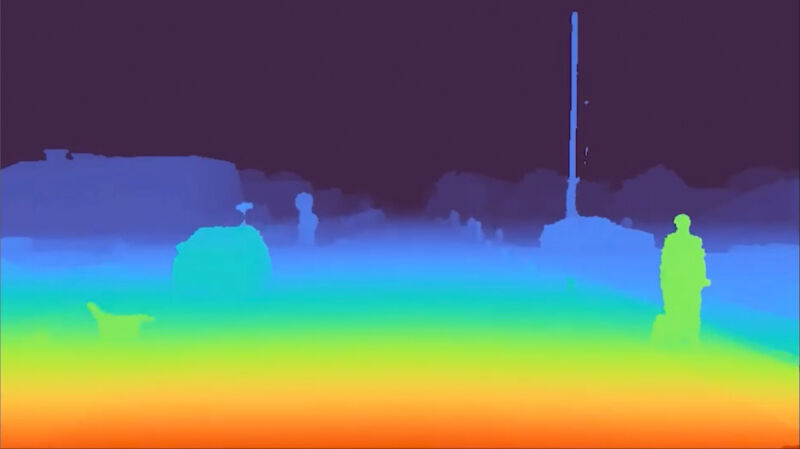

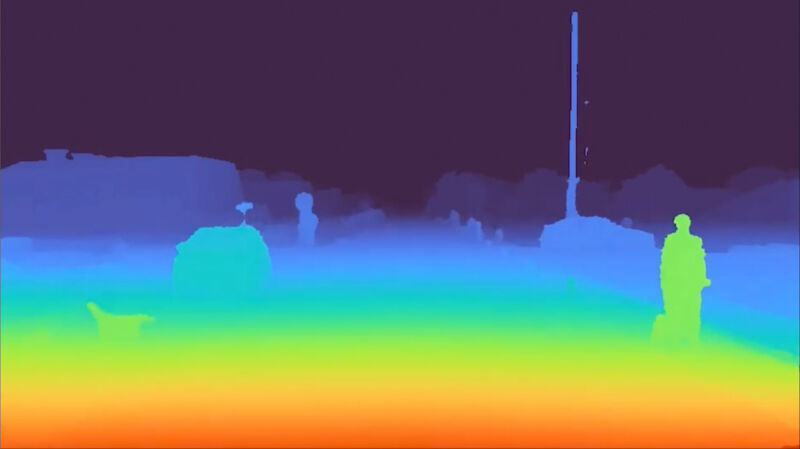

Light’s depth perception relies on trigonometry and allows it to measure the distance to each pixel out to 1,000 m. (credit: Light)

So far, almost every autonomous vehicle we’ve encountered uses lidar to determine how far away things are, just as the winners of the DARPA Grand Challenges did back in the 2000s. But not every AV will necessarily use lidar in the future; other sensors are reaching maturity, and some of them may even do a better job. One that recently caught my eye was developed from smartphone camera tech by a company called Light.

Light has pivoted from its original business of supplying cameras for smartphones to applying its imaging technology to automotive uses such as advanced driver assistance systems (ADAS) and AVs.

Specifically, Light developed an optical camera system called Clarity that can also calculate the distance to every pixel it sees. Knowing the distance to objects means there is no need for a separate lidar sensor, and it also gives machine-learning algorithms more accurate data: a billboard showing a face, for example, would register as a flat surface rather than being recognized by Clarity as an actual human.
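Light doesn't spell out Clarity's algorithm here beyond saying it relies on trigonometry. The sketch below is therefore the generic textbook version of camera-based ranging, not Light's method: stereo triangulation between two rectified cameras under a pinhole model, where similar triangles give depth Z = f·B/d (focal length f in pixels, baseline B in meters, disparity d in pixels). The focal length, baseline, and 0.25-pixel matching error used below are hypothetical values chosen for illustration, not Clarity specifications.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated distance Z = f * B / d for a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_uncertainty(focal_length_px: float, baseline_m: float, depth_m: float,
                      disparity_error_px: float = 0.25) -> float:
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd.

    Error grows with the square of distance, which is why long-range
    stereo needs a long focal length and/or a wide baseline.
    """
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    # Hypothetical rig: 4,000-pixel focal length, cameras 0.5 m apart.
    f_px, baseline = 4000.0, 0.5
    for d in (20.0, 2.0):
        z = depth_from_disparity(f_px, baseline, d)
        err = depth_uncertainty(f_px, baseline, z)
        print(f"disparity {d:5.1f} px -> depth {z:7.1f} m (+/- {err:.2f} m)")
```

With these assumed numbers, a 20-pixel disparity corresponds to 100 m, while a point at 1,000 m shifts by only 2 pixels between the two views, so sub-pixel matching accuracy dominates how far such a system can usefully range.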